Prerequisites

For this post, we assume a locally hosted JupyterLab environment. You can follow the same installation steps for an environment hosted in the cloud as well. The following steps assume that you already have a valid Python 3 and JupyterLab environment (this extension works with JupyterLab v3.0 or higher). Install the AWS Command Line Interface (AWS CLI) if you don’t already have it installed.
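The prerequisites above can be spot-checked before installing anything. The following is a minimal sketch rather than an official check: the function names `parse_major`, `jupyterlab_version`, and `check_prerequisites` are hypothetical, and it assumes that JupyterLab is installed in the same Python environment that runs the script and that the AWS CLI executable is named `aws` on your `PATH`.

```python
import shutil
import sys
from importlib import metadata


def parse_major(version: str) -> int:
    """Return the leading integer of a version string such as '3.6.1'."""
    return int(version.split(".")[0])


def jupyterlab_version():
    """Return the installed JupyterLab version string, or None if it is absent."""
    try:
        return metadata.version("jupyterlab")
    except metadata.PackageNotFoundError:
        return None


def check_prerequisites() -> dict:
    """Collect a pass/fail flag for each prerequisite named in this post."""
    lab = jupyterlab_version()
    return {
        "python3": sys.version_info.major == 3,
        # The extension is stated to work with JupyterLab v3.0 or higher.
        "jupyterlab>=3.0": lab is not None and parse_major(lab) >= 3,
        # Assumption: the AWS CLI is exposed as an executable called "aws".
        "aws_cli_on_path": shutil.which("aws") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

A `missing` result only tells you what to install next. It does not confirm that the AWS CLI is configured with credentials.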


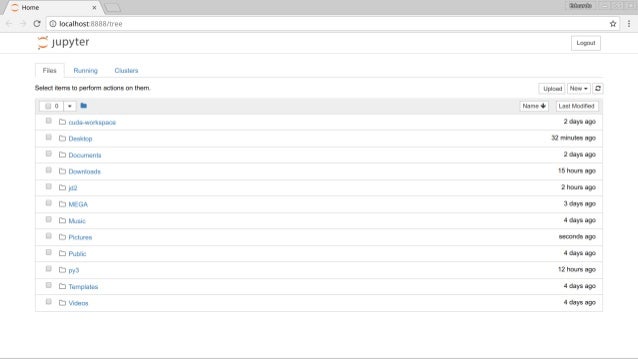

To run such a batch job repeatedly on a schedule, you previously had to set up, configure, and oversee cloud infrastructure to automate deployments, which diverted valuable time away from core data science development activities. To help simplify the process of moving from interactive notebooks to batch jobs, in December 2022, Amazon SageMaker Studio and Studio Lab introduced the capability to run notebooks as scheduled jobs, using notebook-based workflows. You can now use the same capability to run your Jupyter notebooks from any JupyterLab environment, such as Amazon SageMaker notebook instances and JupyterLab running on your local machine. SageMaker provides an open-source extension that can be installed on any JupyterLab environment and used to run notebooks as ephemeral jobs and on a schedule. In this post, we show you how to run your notebooks from your local JupyterLab environment as scheduled notebook jobs on SageMaker.

The solution architecture for scheduling notebook jobs from any JupyterLab environment is shown in the accompanying diagram. The SageMaker extension expects the JupyterLab environment to have valid AWS credentials and permissions to schedule notebook jobs. We discuss the steps for setting up credentials and AWS Identity and Access Management (IAM) permissions later in this post. In addition to the IAM user and assumed role session scheduling the job, you also need to provide a role for the notebook job instance to assume for access to your data in Amazon Simple Storage Service (Amazon S3) or to connect to Amazon EMR clusters as needed. In the following sections, we show how to set up the architecture and install the open-source extension, run a notebook with the default configurations, and also use the advanced parameters to run a notebook with custom settings.
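Because the extension expects the JupyterLab environment to already hold AWS credentials, it can help to see which credential sources are visible to the process that launches JupyterLab. The sketch below is an illustration and not part of the extension: `credential_sources` is a hypothetical helper, and it looks only at the standard AWS environment variables and the default shared credentials file. It reports presence, not validity, and it says nothing about whether the IAM permissions are sufficient to schedule notebook jobs.

```python
import os
from pathlib import Path


def credential_sources(env=None, home=None) -> list:
    """List the AWS credential sources that appear to be configured.

    env  -- mapping of environment variables (defaults to os.environ)
    home -- home directory to search (defaults to the current user's home)
    """
    env = os.environ if env is None else env
    home = Path.home() if home is None else Path(home)
    found = []

    # Static keys exported directly into the environment.
    if env.get("AWS_ACCESS_KEY_ID") and env.get("AWS_SECRET_ACCESS_KEY"):
        found.append("environment variables")

    # A named profile selected through AWS_PROFILE.
    if env.get("AWS_PROFILE"):
        found.append(f"named profile '{env['AWS_PROFILE']}'")

    # The shared credentials file, at its default or overridden location.
    cred_file = Path(
        env.get("AWS_SHARED_CREDENTIALS_FILE") or home / ".aws" / "credentials"
    )
    if cred_file.is_file():
        found.append(f"shared credentials file {cred_file}")

    return found


if __name__ == "__main__":
    sources = credential_sources()
    print(sources if sources else "No AWS credential source detected")
```

An empty result suggests configuring credentials before installing the extension. A non-empty result still needs the IAM setup described later in this post.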


Jupyter notebooks are highly favored by data scientists for their ability to interactively process data, build ML models, and test these models by making inferences on data. However, there are scenarios in which data scientists may prefer to transition from interactive development on notebooks to batch jobs. Examples of such use cases include scaling up a feature engineering job that was previously tested on a small sample dataset on a small notebook instance, running nightly reports to gain insights into business metrics, and retraining ML models on a schedule as new data becomes available. Migrating from interactive development on notebooks to batch jobs required you to copy code snippets from the notebook into a script, package the script with all its dependencies into a container, and schedule the container to run.
